Unveiling the Potential of Transfer Learning with T5 – Google’s NLP Breakthrough

Introduction: Natural language processing (NLP) is changing quickly, and Google’s T5 (Text-to-Text Transfer Transformer) recasts every language task in a single text-to-text format. This article discusses T5’s design, its capabilities, and its impact on NLP as a showcase for transfer learning.

The Role of Transfer Learning in T5:

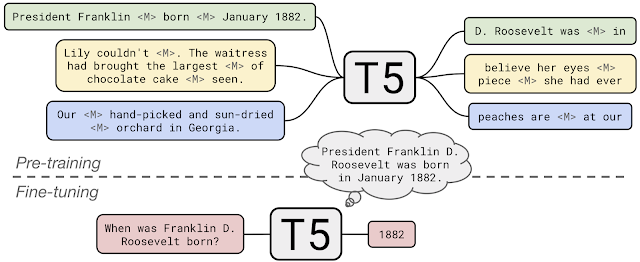

T5 relies on transfer learning, in which a model is first pre-trained on a large body of text and then fine-tuned on a downstream task, so that knowledge gained in one setting carries over to another. T5’s distinctive step is to treat every NLP task as a text-to-text problem, which gives a single framework for a wide range of language processing tasks.

Key T5 Features:

Transformer Architecture: T5 is built on the Transformer architecture, a staple of current NLP models, in its encoder-decoder form. The unified text-to-text approach simplifies design and training because every task is defined as converting input text to output text.

Self-Attention Mechanism: Self-attention lets T5 weigh how relevant every other token in a sequence is to each token, which helps it interpret input and generate coherent, contextually relevant output.
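To illustrate the weighing step, here is a minimal sketch of generic single-head dot-product self-attention in plain Python. It is not T5’s actual implementation: the learned query, key, and value projections and the multiple heads of a real Transformer are omitted, and the function names and toy vectors are assumptions made for this example.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention for one head, on plain lists of vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this token's query to every token's key.
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys
        ]
        weights = softmax(scores)
        # The output is the attention-weighted average of the value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Toy 3-token sequence with 2-dimensional vectors (made-up numbers).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(x, x, x)
```

Each row of `weights` sums to 1 and says how much one token draws on every token in the sequence, itself included, when its output vector is computed.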

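Positional Information: Self-attention on its own is insensitive to word order, so T5 adds position information. Rather than fixed absolute positional encodings, it uses learned relative position embeddings that depend on the offset between two tokens.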
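As described in the T5 paper, position information enters as a learned scalar added to each attention logit, chosen by the offset between the query and key positions. The sketch below shows that idea in a deliberately simplified form: offsets are clipped to a small range instead of being grouped into the logarithmically sized buckets the paper describes, and the function names and bias values are made up for illustration.

```python
def relative_offsets(seq_len: int, max_distance: int):
    """Matrix of key-minus-query offsets, clipped to [-max_distance, max_distance]."""
    return [
        [max(-max_distance, min(max_distance, k - q)) for k in range(seq_len)]
        for q in range(seq_len)
    ]


def add_position_bias(logits, bias_by_offset, max_distance):
    """Add one scalar per clipped offset to each attention logit."""
    offsets = relative_offsets(len(logits), max_distance)
    return [
        [logit + bias_by_offset[off] for logit, off in zip(row, off_row)]
        for row, off_row in zip(logits, offsets)
    ]


# Made-up bias values; in a trained model these scalars would be learned.
max_distance = 2
bias_by_offset = {-2: -0.5, -1: 0.2, 0: 1.0, 1: 0.2, 2: -0.5}
logits = [[0.0] * 4 for _ in range(4)]
biased = add_position_bias(logits, bias_by_offset, max_distance)
```

Because the bias depends only on the offset, the same small table of scalars serves every position in the sequence.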

T5 Versatility:

T5’s unified text-to-text formulation makes it adaptable to many NLP applications. The same model can handle translation, summarization, sentiment analysis, and question answering without task-specific architectures; a short text prefix on the input tells the model which task to perform.
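A small sketch can make the text-to-text framing concrete. The prefixes below follow the style of the examples in the T5 paper, while the `TASK_PREFIXES` dictionary and the `to_text_to_text` helper are hypothetical names introduced only for this illustration; no model is loaded or called.

```python
# Task prefixes in the style of the T5 paper's examples. The dictionary and
# function names are illustrative and not part of any library.
TASK_PREFIXES = {
    "translate_en_de": "translate English to German: ",
    "summarize": "summarize: ",
    "cola": "cola sentence: ",
}


def to_text_to_text(task: str, text: str) -> str:
    """Turn a raw input into the single string a text-to-text model would read."""
    if task not in TASK_PREFIXES:
        raise ValueError(f"unknown task: {task}")
    return TASK_PREFIXES[task] + text.strip()


examples = [
    ("translate_en_de", "That is good."),
    ("summarize", "A long news article would go here."),
    ("cola", "The course is jumping well."),
]
model_inputs = [to_text_to_text(task, text) for task, text in examples]
```

Every task yields an ordinary string, and the expected output is an ordinary string too (a translation, a summary, or a label word), which is why one model and one training objective can cover all of them.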

Effects of T5 on NLP Research:

Google’s T5 has had a substantial influence on NLP research, with practical implications for researchers and practitioners. Notable effects include:

Multitask Learning: Because every task shares the same input and output format, a single T5 model can be trained on many NLP tasks at once, which reduces the need to build a separate model for each problem.

Domain Adaptation: Researchers can fine-tune T5 on domain-specific datasets so that it handles the language and terminology of scientific or other specialized fields.

Enhanced Information Extraction: T5 can be applied to data mining and knowledge discovery in scientific literature, because extracting structured information from unstructured text can itself be framed as a text-to-text task.
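Returning to the multitask learning point above: when one model trains on several tasks, something has to decide how often each task is sampled. The sketch below implements examples-proportional mixing with a size cap, one of the strategies discussed in the T5 paper, where each task is sampled in proportion to min(dataset size, limit). The dataset sizes, the limit, and the function names are assumptions chosen for illustration.

```python
import random


def mixing_rates(dataset_sizes: dict, limit: int) -> dict:
    """Sampling probability per task, proportional to min(size, limit)."""
    capped = {task: min(size, limit) for task, size in dataset_sizes.items()}
    total = sum(capped.values())
    return {task: c / total for task, c in capped.items()}


def sample_task(rates: dict, rng: random.Random) -> str:
    """Draw the task that supplies the next training example."""
    tasks = list(rates)
    return rng.choices(tasks, weights=[rates[t] for t in tasks], k=1)[0]


# Hypothetical dataset sizes (number of examples per task).
sizes = {"translation": 4_000_000, "summarization": 300_000, "sentiment": 60_000}
rates = mixing_rates(sizes, limit=500_000)

rng = random.Random(0)
schedule = [sample_task(rates, rng) for _ in range(8)]
```

The cap keeps the largest dataset from crowding out the smaller ones: without it, translation would supply over 90% of the examples in this toy setup, and with it the share drops to roughly 58%.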

Conclusion:

Google’s Text-to-Text Transfer Transformer, T5, marked an important step for transfer learning in NLP. Its Transformer architecture, self-attention mechanism, and unified text-to-text formulation give scientists, researchers, and NLP practitioners one flexible tool for a wide range of language processing problems. As the ideas behind T5 continue to shape the field, text understanding and generation are likely to improve further, opening up new opportunities for scientific inquiry and information processing.

Source: https://blog.research.google/2020/02/exploring-transfer-learning-with-t5.html?m=1.